Introduction

This article introduces the artificial intelligence concepts used in a large number of games. You will learn about the tools available for solving AI problems, how they work together, and how you might implement them in the language or engine of your choice.

This article assumes a basic understanding of games and some familiarity with mathematical concepts such as geometry and trigonometry. The code examples are all pseudocode, so no knowledge of a particular programming language is required.

What is game AI

Game AI is mainly about deciding which actions a game entity should take given the current conditions. Traditional AI calls such an entity an "agent". In games the agent is usually a character, but it can also be a car, a robot, or something more abstract, such as a group of entities, a country, or a population. In every case the agent needs to observe its surroundings, make decisions based on them, and act. This process is usually described as a sense/think/act cycle:

Sense: The agent detects, or is told about, things in the environment that may affect its behavior (nearby dangers, items to pick up, points of interest to investigate).

Think: The agent decides how to respond (for example, choosing a safe moment to pick something up, or deciding whether to attack or hide first).

Act: The agent puts that decision into action (for example, starting to move toward the enemy or the target object).

The environment then changes, and the cycle repeats with new data.

In real-world AI problems, much of the effort goes into the "sense" step. For example, a self-driving car must take pictures of the road ahead, combine them with radar or LIDAR data, and then try to interpret what it sees. This is usually done with machine learning.

Games are different: they don't need complex systems to extract information, because most of it already exists inside the simulation. We don't need to run an image recognition algorithm to find the enemy; the game knows where the enemy is and can feed that information directly into the decision-making process. So the "sense" part of a game is relatively simple, and the hard part is the last two steps.

Limitations on the development of game AI

AI in games usually faces the following limitations:

It is usually not "pre-trained" like a machine learning algorithm. It is impractical to write a neural network that observes thousands of players and learns from them, because that simply cannot be done before the game is released.

The game should provide fun and challenge rather than pursue "optimal" play. Even if an agent could be made to outperform humans, that is usually not what the designer wants.

The agent shouldn't feel too "mechanical"; players should be able to believe that they are playing with or against humans rather than machines. AlphaGo is powerful, but players who faced it felt that its style of playing Go was unusual, like playing against an alien. Game AI should therefore be tuned to appear more human-like.

It must run in real time, so the algorithm cannot occupy the CPU for a long time just to come up with a decision. Even 10 milliseconds is too long: at 60 frames per second, a whole frame lasts only about 16.7 milliseconds.

The ideal system is data-driven rather than hard-coded, so that even people who don’t know how to program can make adjustments.

With these constraints in mind, we can begin to look at the simple AI methods used in the sense/think/act cycle.

Basic decision making

Let's take the simple game "Pong" as an example. The goal is to keep the ball bouncing off the paddle; if the ball gets past the paddle, you lose. The AI's task is to decide which way to move the paddle.

Hard-coded conditional statements

If the AI controls the paddle, the most direct and easiest approach is to try to keep the paddle under the ball at all times. Then, by the time the ball reaches the paddle, the paddle is already in the right place.

In pseudocode:

    every frame/update while the game is running:
        if the ball is to the left of the paddle:
            move the paddle left
        else if the ball is to the right of the paddle:
            move the paddle right

This approach is very simple, yet the code already contains all three steps of the cycle:

The "sense" part is in the two "if" conditions. The game knows where the ball and the paddle are, so the AI asks the game for those positions and thereby senses whether the ball is to the left or to the right.

The "think" part is also in the two "if" statements. They embody two decisions, which together determine whether to move the paddle left, move it right, or leave it where it is.

The "act" part is moving the paddle left or right. In other games, it might also include how fast to move.

We call this kind of AI "reactive" because a simple set of rules reacts directly to the current state of the world.
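The pseudocode above can be sketched in Python. This is only a minimal illustration that is not tied to any engine; `paddle_move`, `ball_x`, `paddle_x`, and `paddle_speed` are assumed names, and positions are plain numbers along the horizontal axis.

```python
def paddle_move(ball_x: float, paddle_x: float, paddle_speed: float = 1.0) -> float:
    """Return the horizontal displacement for the paddle this frame."""
    # Sense: read the positions the game already knows.
    # Think: compare them to decide on a direction.
    if ball_x < paddle_x:
        return -paddle_speed  # Act: move left
    elif ball_x > paddle_x:
        return paddle_speed   # Act: move right
    return 0.0                # ball is neither left nor right: stay put


# One simulated frame: the ball is to the left of the paddle.
paddle_x = 5.0
paddle_x += paddle_move(ball_x=2.0, paddle_x=paddle_x)
```

A real game would call such a function once per update and would probably also clamp the paddle to the playing field.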

Decision tree

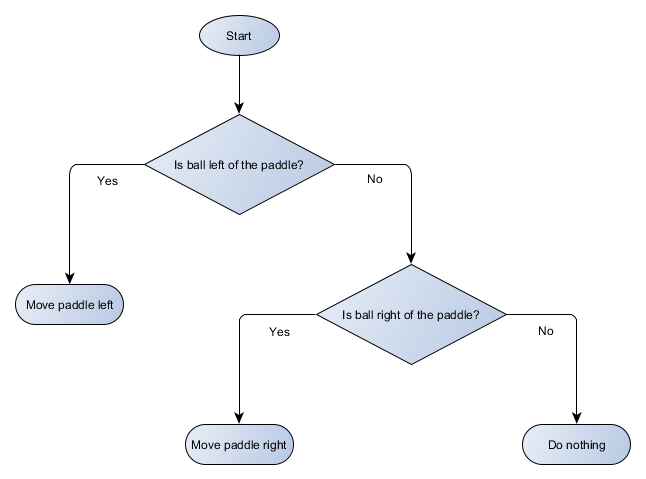

The Pong example can also be visualized as a decision tree:

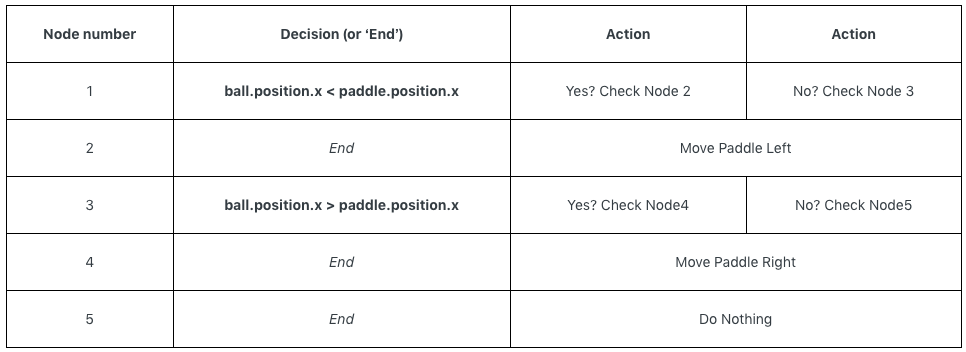

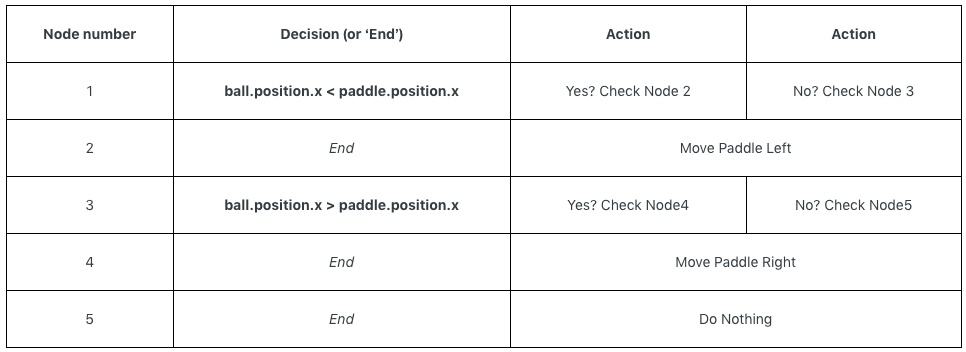

At first glance, a decision tree may seem no different from an if statement. But in this system each decision consists of exactly one condition and two possible outcomes, which lets developers build the AI from data that describes the tree instead of hard-coding it. Such a tree can be described in a simple table, with one row per decision.

Given a large number of examples, decision trees are really powerful and can classify situations efficiently.
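To make the idea of building the AI from data concrete, here is a minimal sketch in which the tree is a plain table and a small function walks it. The node layout, the condition names, and the `evaluate` helper are all assumptions made for illustration, not a format the article prescribes.

```python
# Each row: node id -> (condition name, node if true, node if false)
# or node id -> ("END", action name). All names here are hypothetical.
TREE = {
    1: ("ball_left_of_paddle", 2, 3),
    2: ("END", "move_left"),
    3: ("ball_right_of_paddle", 4, 5),
    4: ("END", "move_right"),
    5: ("END", "do_nothing"),
}

# Conditions are supplied by the programmer; the table refers to them by name.
CONDITIONS = {
    "ball_left_of_paddle": lambda w: w["ball_x"] < w["paddle_x"],
    "ball_right_of_paddle": lambda w: w["ball_x"] > w["paddle_x"],
}


def evaluate(tree: dict, world: dict, node_id: int = 1) -> str:
    """Walk the table from the root until an END row yields an action."""
    while True:
        row = tree[node_id]
        if row[0] == "END":
            return row[1]
        condition, if_true, if_false = row
        node_id = if_true if CONDITIONS[condition](world) else if_false


action = evaluate(TREE, {"ball_x": 2.0, "paddle_x": 5.0})
```

Because `TREE` is just data, it could be loaded from a file that a designer edits, while `CONDITIONS` stays in code.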

Scripting

The people who design the AI can arrange the decision tree however they like, but they still depend on the programmer to provide every condition and action they need. What if we gave designers better tools that let them create their own conditions?

Instead of providing a fixed condition (such as whether the ball is to the left or right of the paddle), the programmer can expose the underlying values, and the decision tree data can then refer to those values directly.

The structure is the same as before, but each decision now carries its own code. It is possible, and common, to take this idea to its logical conclusion and write the behavior in a scripting language rather than as data.
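As a rough sketch of decisions that carry their own expressions, the table below stores small comparisons over exposed values instead of named conditions. The value names (`ball.position.x`, `paddle.position.x`), the tuple format, and the `decide` helper are hypothetical; a real game would more likely embed a full scripting language.

```python
import operator

# Comparison operators a designer may use in the data.
OPS = {"<": operator.lt, ">": operator.gt, "==": operator.eq}

# Each decision is an expression over values exposed by the programmer,
# written as (left value name, operator, right value name).
TREE = {
    1: (("ball.position.x", "<", "paddle.position.x"), 2, 3),
    2: "move_left",
    3: (("ball.position.x", ">", "paddle.position.x"), 4, 5),
    4: "move_right",
    5: "do_nothing",
}


def decide(tree: dict, values: dict, node_id: int = 1) -> str:
    """Evaluate expression nodes until a leaf (an action name) is reached."""
    node = tree[node_id]
    while not isinstance(node, str):
        (left, op, right), if_true, if_false = node
        result = OPS[op](values[left], values[right])
        node = tree[if_true if result else if_false]
    return node


values = {"ball.position.x": 8.0, "paddle.position.x": 5.0}
action = decide(TREE, values)
```

Keeping the expressions as data rather than passing strings to `eval` is a deliberate choice here: it limits what a table can do to the operators listed in `OPS`.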

React to events

In the Pong example above, the core idea is to run the three-step loop continuously and act on the state of the environment as of the latest step. In more complex games, however, the AI more often reacts to specific events than re-evaluates every condition, which is very common when the game situation changes.

For example, in a shooter the enemies are idle at first. Once they spot the player, enemies with different roles take different actions: the assault troops may charge the player, while the sniper stays back and prepares to shoot. This is still a basic reactive system, but it requires a more advanced decision-making process.
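The shooter example can be sketched with a tiny publish/subscribe mechanism. Everything named here (`subscribe`, `emit`, the `player_spotted` event, the role and state strings) is an assumption for illustration; engines usually provide their own event systems.

```python
from collections import defaultdict

# Event name -> list of callbacks. A tiny, hypothetical event system.
listeners = defaultdict(list)


def subscribe(event: str, callback) -> None:
    listeners[event].append(callback)


def emit(event: str, **data) -> list:
    """Notify every listener and collect what each one decided to do."""
    return [callback(**data) for callback in listeners[event]]


class Enemy:
    def __init__(self, role: str):
        self.role = role
        self.state = "idle"  # enemies start out idle
        subscribe("player_spotted", self.on_player_spotted)

    def on_player_spotted(self, player_pos) -> str:
        # Same event, different reaction depending on the role.
        self.state = "charge" if self.role == "assault" else "hold_and_aim"
        return f"{self.role}: {self.state}"


assault, sniper = Enemy("assault"), Enemy("sniper")
reactions = emit("player_spotted", player_pos=(10, 4))
```

The agents do no work until the event fires, which is the main difference from polling conditions every frame.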

Advanced decision making

Sometimes we want to make different decisions depending on the agent's current state. Expressing this in a decision tree or script becomes unwieldy and inefficient when there are many conditions. At other times we have to think ahead and estimate how the environment will change, so more sophisticated methods are needed.

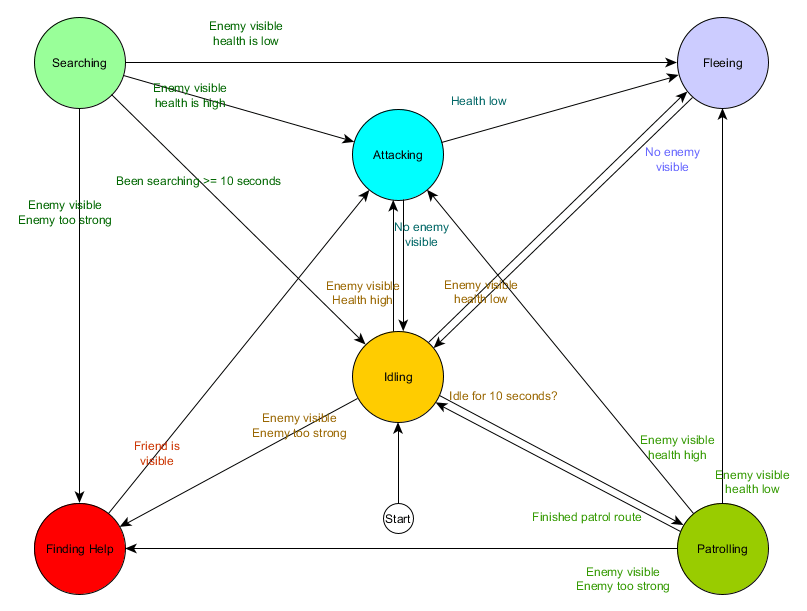

Finite state machine

A finite state machine (FSM) describes an object, such as an AI agent, that is in exactly one state at any given time and can later move to another state. Because the total number of states is finite, it is called a "finite state machine". A real-world example is a traffic light.

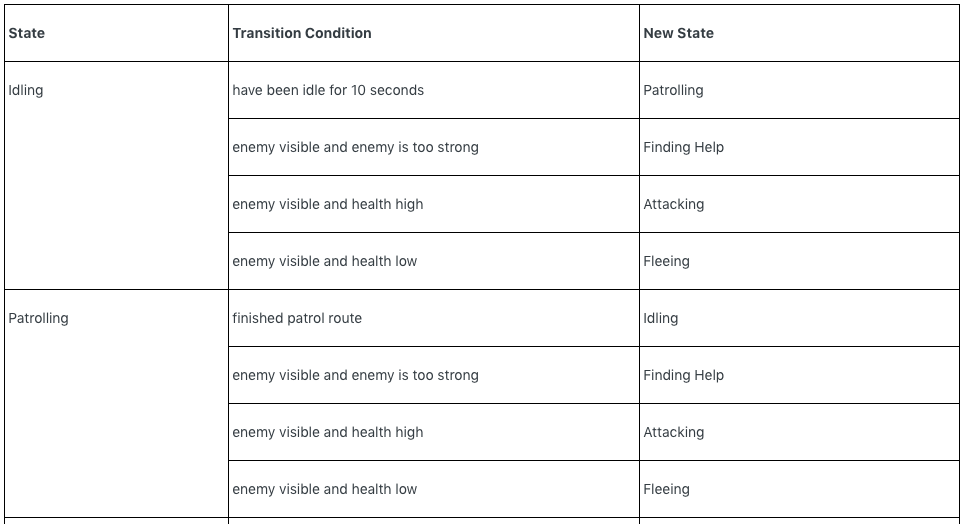

In a game, a guard's behaviors such as patrolling, attacking, or fleeing can be expressed with simple if statements. But once you add more states, such as wandering, searching, or running for help, the if conditions become very complicated. Taking all the states into account, we can instead list the transitions needed between each pair of states.

As a diagram:

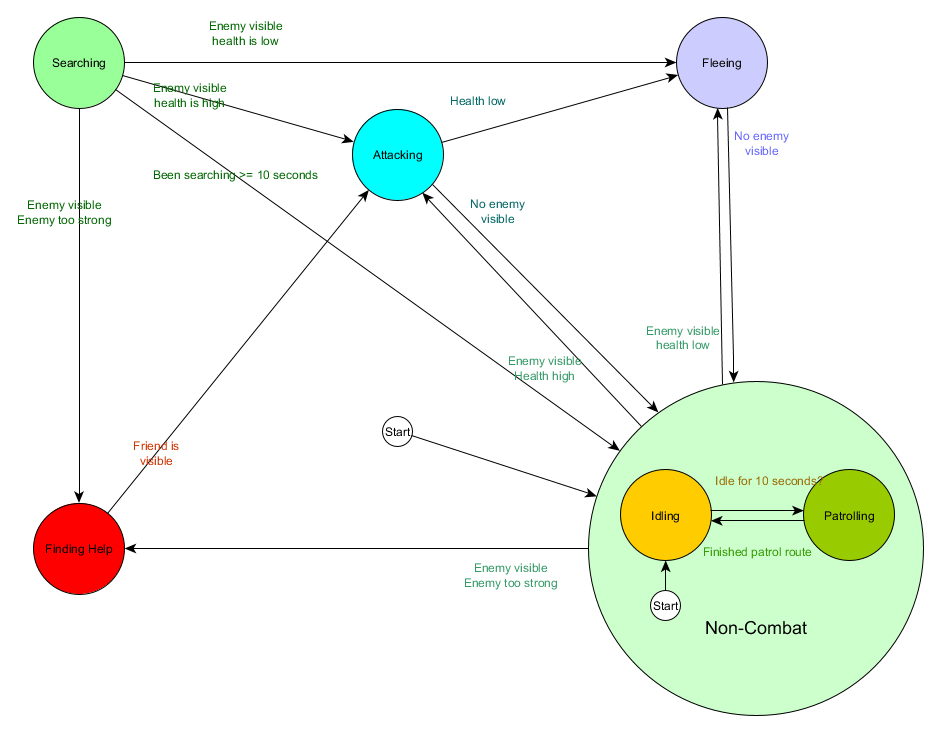
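A transition list like the one described above maps naturally onto a lookup table. The following is a minimal sketch: the state names come from the text, while the event names and the particular transitions are assumptions chosen for illustration.

```python
# (current state, event) -> next state. Pairs not listed leave the state unchanged.
TRANSITIONS = {
    ("patrolling", "enemy_seen"): "attacking",
    ("attacking", "health_low"): "fleeing",
    ("attacking", "enemy_lost"): "searching",
    ("searching", "enemy_seen"): "attacking",
    ("searching", "search_timed_out"): "patrolling",
    ("fleeing", "reached_safety"): "patrolling",
}


class Guard:
    def __init__(self):
        self.state = "patrolling"

    def handle(self, event: str) -> str:
        """Apply one event and return the resulting state."""
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state


guard = Guard()
events = ("enemy_seen", "enemy_lost", "enemy_seen", "health_low")
history = [guard.handle(event) for event in events]
```

Each state would normally also have its own per-frame behavior; only the transition logic is shown here.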

Hierarchical state machine

You may have noticed that some of the transitions listed for different states are identical. Most of the transitions out of the wandering state are the same as those out of the patrolling state, even though the two states should remain distinct. Wandering and patrolling are both part of a non-combat state, so we can treat them as its "sub-states":

Main states

Non-combat states

Visualization

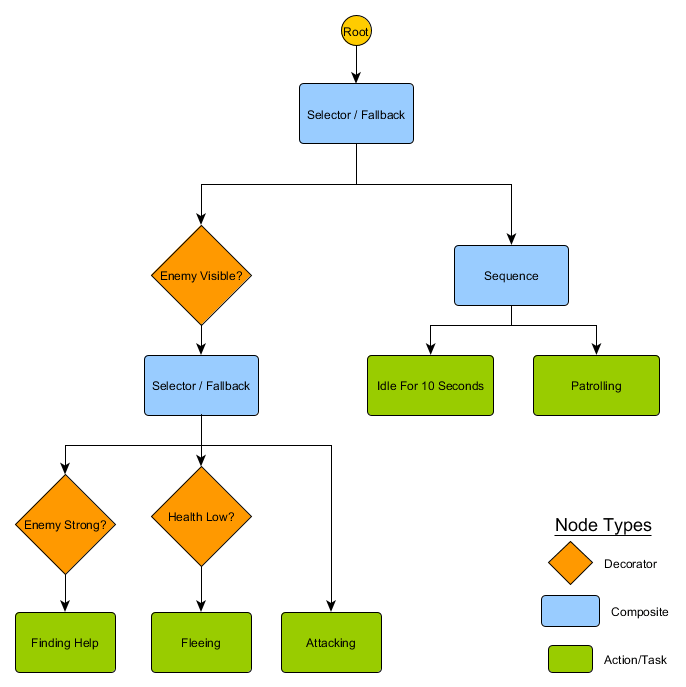
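One way to sketch the sub-state idea is to let a state fall back to its parent when it has no transition of its own. The `PARENT` table, the event names, and the `next_state` helper below are hypothetical; they only illustrate how a shared transition can be written once.

```python
# Sub-state -> parent state. Wandering and patrolling share the non-combat parent.
PARENT = {"wandering": "non_combat", "patrolling": "non_combat"}

# Transitions may be keyed by a sub-state or by its parent.
TRANSITIONS = {
    ("wandering", "rested"): "patrolling",
    ("patrolling", "tired"): "wandering",
    ("non_combat", "enemy_seen"): "attacking",  # written once for both sub-states
    ("attacking", "enemy_defeated"): "patrolling",
}


def next_state(state: str, event: str) -> str:
    """Look for a transition on the state itself, then on its parent."""
    if (state, event) in TRANSITIONS:
        return TRANSITIONS[(state, event)]
    parent = PARENT.get(state)
    if parent is not None and (parent, event) in TRANSITIONS:
        return TRANSITIONS[(parent, event)]
    return state  # no matching rule: stay where we are


from_wandering = next_state("wandering", "enemy_seen")
from_patrolling = next_state("patrolling", "enemy_seen")
```

Both calls resolve through the single `non_combat` rule, so changing that rule changes the behavior of every sub-state at once.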

Behavior trees

State machines have one small problem: the transition rules are tightly bound to the current state. In many games this is not an issue, and the hierarchical state machine described above also reduces repetition. But sometimes you want a general rule that applies regardless of state. For example, you may want the agent to run away when its health drops to 25%. If the designer later lowers that threshold to 10%, you would have to change every related transition.

Ideally, we want a system in which the decisions about which state to be in live outside the states themselves, so that a transition rule can be changed in a single place. This is what a behavior tree provides.

There are many ways to implement behavior trees, but the core idea is the same: the algorithm starts at a "root node", and each node in the tree represents a decision or an action. For example, the guard's hierarchical state machine from earlier can be expressed as a behavior tree.

You may notice that such a tree has no transition back from the patrolling state to the wandering state. To get that behavior, introduce an unconditional "repeat" node.
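The following is a minimal behavior tree sketch, assuming the common selector/sequence node types; the class names, the agent dictionary, and the guard tree are illustrative rather than the article's own implementation. Re-running the tree from the root on every update stands in for a repeat node here.

```python
class Selector:
    """Runs children in order and succeeds as soon as one succeeds."""
    def __init__(self, *children):
        self.children = children

    def tick(self, agent: dict) -> bool:
        return any(child.tick(agent) for child in self.children)


class Sequence:
    """Runs children in order and fails as soon as one fails."""
    def __init__(self, *children):
        self.children = children

    def tick(self, agent: dict) -> bool:
        return all(child.tick(agent) for child in self.children)


class Condition:
    def __init__(self, predicate):
        self.predicate = predicate

    def tick(self, agent: dict) -> bool:
        return self.predicate(agent)


class Action:
    def __init__(self, name: str):
        self.name = name

    def tick(self, agent: dict) -> bool:
        agent["current_action"] = self.name  # a real action would run over time
        return True


FLEE_THRESHOLD = 0.25  # the designer changes this in exactly one place

guard_tree = Selector(
    Sequence(Condition(lambda a: a["health"] <= FLEE_THRESHOLD), Action("flee")),
    Sequence(Condition(lambda a: a["enemy_visible"]), Action("attack")),
    Action("patrol"),
)

guard = {"health": 0.2, "enemy_visible": True}
guard_tree.tick(guard)  # called again from the root on every update
```

Because the flee rule sits near the root, it takes priority over attacking and patrolling without being repeated in each of them.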

Action and navigation

We have seen examples of a paddle moving and of guards moving around, but how do we actually move them over a period of time? How do we set the speed, avoid obstacles, and plan a route? This part covers those questions.

Control

At the most basic level, we can assume that each agent has its own speed and direction of movement. The agent calculates the speed and direction in the think step and applies them in the act step. If we know the agent's destination, we can express the movement it needs as:

desired_travel = destination_position - agent_position

In a more complex environment, however, this simple equation is not enough. The agent may be too slow to cover the whole distance in one update, or it may run into obstacles along the way. So you sometimes need to take other values into account to make the movement more sophisticated.
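As a small worked example of the equation above, the sketch below limits the movement in one update to what the agent's speed allows. The function name `step_towards` and the parameters `max_speed` and `dt` (time step in seconds) are assumptions; obstacle handling is deliberately left out.

```python
import math


def step_towards(agent_pos, destination, max_speed: float, dt: float):
    """Move from agent_pos towards destination, limited by max_speed * dt."""
    # desired_travel = destination_position - agent_position
    dx = destination[0] - agent_pos[0]
    dy = destination[1] - agent_pos[1]
    distance = math.hypot(dx, dy)
    max_step = max_speed * dt
    if distance <= max_step:
        return destination  # close enough to arrive during this update
    scale = max_step / distance  # shrink the travel vector to the allowed length
    return (agent_pos[0] + dx * scale, agent_pos[1] + dy * scale)


position = step_towards((0.0, 0.0), (3.0, 4.0), max_speed=5.0, dt=0.5)
```

Calling this once per update moves the agent in a straight line until it arrives.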

Pathfinding

On a grid, to reach a destination you first look at the walkable squares around you. A simple search works like this: start from the starting point, keep exploring outward until the destination is found, and then trace the route back.
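The simple search described above corresponds to breadth-first search. Here is a minimal sketch, assuming a grid stored as a list of rows in which 0 is walkable and 1 is blocked, with movement in four directions; the names `find_path` and `GRID` are illustrative.

```python
from collections import deque


def find_path(grid, start, goal):
    """Breadth-first search on a grid of 0 (free) and 1 (blocked) cells."""
    came_from = {start: None}
    frontier = deque([start])
    while frontier:
        current = frontier.popleft()
        if current == goal:
            break
        row, col = current
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            inside = 0 <= r < len(grid) and 0 <= c < len(grid[0])
            if inside and grid[r][c] == 0 and nxt not in came_from:
                came_from[nxt] = current
                frontier.append(nxt)
    if goal not in came_from:
        return None  # the destination cannot be reached
    # Walk backwards from the goal to recover the route.
    path = []
    step = goal
    while step is not None:
        path.append(step)
        step = came_from[step]
    return path[::-1]


GRID = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
path = find_path(GRID, (0, 0), (2, 0))
```

On a grid where every step costs the same, this finds a shortest route, at the price of exploring in all directions.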

However, this search method is wasteful, and it can take a long time to find the best route. An alternative is to always pick the square that lies closest to the target when choosing where to search next, which greatly reduces the number of candidate squares.
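Always expanding the square that looks closest to the target is usually called greedy best-first search. The sketch below assumes the same grid format as before (0 walkable, 1 blocked) and uses Manhattan distance as the estimate; `greedy_path` and `OPEN_GRID` are illustrative names. Note that this strategy does not guarantee the shortest route when obstacles are in the way.

```python
import heapq


def greedy_path(grid, start, goal):
    """Greedy best-first search: expand the cell that looks closest to the goal."""
    def estimate(cell):
        # Manhattan distance to the goal, ignoring obstacles.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    came_from = {start: None}
    frontier = [(estimate(start), start)]
    expanded = 0  # how many cells were taken off the frontier
    while frontier:
        _, current = heapq.heappop(frontier)
        expanded += 1
        if current == goal:
            break
        row, col = current
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            inside = 0 <= r < len(grid) and 0 <= c < len(grid[0])
            if inside and grid[r][c] == 0 and nxt not in came_from:
                came_from[nxt] = current
                heapq.heappush(frontier, (estimate(nxt), nxt))
    if goal not in came_from:
        return None, expanded
    path, step = [], goal
    while step is not None:
        path.append(step)
        step = came_from[step]
    return path[::-1], expanded


OPEN_GRID = [[0] * 5 for _ in range(5)]
path, expanded = greedy_path(OPEN_GRID, (0, 0), (4, 4))
```

Comparing `expanded` with the total number of squares in the grid gives a rough feel for how much work the estimate saves.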

Learn and adapt

Although we mentioned at the beginning of the article that machine learning is not commonly used in game AI, we can still borrow from it, which may be useful when designing games or competitive play. For example, on the statistics and probability side, a naive Bayes classifier can examine a large amount of input data and try to classify it so that the agent can respond appropriately to the current situation. Markov models and similar techniques can be used for prediction.
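As one small illustration of prediction, the sketch below uses a first-order Markov model that counts which move tends to follow which. The `MovePredictor` class and the move names are hypothetical, and a real game would need far more data than this.

```python
from collections import Counter, defaultdict


class MovePredictor:
    """First-order Markov model: predicts the next move from the previous one."""
    def __init__(self):
        self.counts = defaultdict(Counter)  # previous move -> Counter of next moves
        self.previous = None

    def observe(self, move: str) -> None:
        if self.previous is not None:
            self.counts[self.previous][move] += 1
        self.previous = move

    def predict(self):
        """Most frequent follower of the last observed move, or None if unseen."""
        followers = self.counts.get(self.previous)
        if not followers:
            return None
        return followers.most_common(1)[0][0]


predictor = MovePredictor()
for move in ["punch", "kick", "punch", "kick", "punch", "block", "punch"]:
    predictor.observe(move)
prediction = predictor.predict()
```

The agent could use such a prediction to prepare a counter before the player acts.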

Knowledge representation

We have discussed various methods for making decisions, planning, and predicting, but how can we capture the whole game world more effectively? How should we collect and organize all the information? How do we turn data into information or knowledge? The approach differs from game to game, but several common methods can be used.

Tags

Tagging pieces of information so that they can be searched for is the most common method. In code, tags are often represented as strings, but if you know all the tags in use, you can convert the strings into unique numbers, which saves space and speeds up searches.
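Here is a minimal sketch of turning tag strings into numbers, assuming the full set of tags is known up front. Using bit flags (Python's `enum.IntFlag`) is one possible design choice rather than the only one, and the tag names are invented for the example.

```python
from enum import IntFlag, auto


class Tag(IntFlag):
    """Every tag known to the game, each stored as one bit of an integer."""
    ENEMY = auto()
    COVER = auto()
    PICKUP = auto()
    FLAMMABLE = auto()


def parse_tags(names):
    """Convert designer-facing strings into a single integer bit set."""
    result = Tag(0)
    for name in names:
        result |= Tag[name.upper()]
    return result


crate = parse_tags(["cover", "flammable"])
is_cover = Tag.COVER in crate  # a bitwise test, no string comparison
```

Designers can keep writing readable strings, while the game stores and compares plain integers at run time.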

Smart objects

Sometimes tags are not enough to cover all the useful information needed, so another way to store information is to have objects tell the AI which options they offer and let the agents choose according to their needs.

Response curve

A response curve is, put simply, a graph. The input, some value such as "distance to nearest enemy", is on the x-axis, and the output, usually from 0.0 to 1.0, is on the y-axis. The curve defines the mapping from input to output.
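A response curve can be sketched as a piecewise-linear function through a few points. The `response_curve` helper and the `ATTACK_DESIRE` points below are assumptions for illustration; real curves are often authored by designers in an editor.

```python
def response_curve(points, x: float) -> float:
    """Piecewise-linear curve through (input, output) points sorted by input."""
    if x <= points[0][0]:
        return points[0][1]  # before the first point: clamp
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x <= x1:
            t = (x - x0) / (x1 - x0)  # position between the two points, 0..1
            return y0 + t * (y1 - y0)
    return points[-1][1]  # beyond the last point: clamp


# Hypothetical curve: how strongly an agent wants to attack, by enemy distance.
ATTACK_DESIRE = [(0.0, 1.0), (10.0, 0.5), (30.0, 0.0)]
desire = response_curve(ATTACK_DESIRE, 20.0)
```

The output can then be compared with the results of other curves to pick the most attractive action.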

Blackboard

A blackboard, as the name suggests, is a shared place where each participant in the game records the actions it has taken or the decisions it has made, such as pathfinding results, so that others can use that data too.
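At its simplest, a blackboard can be a shared key/value store. The `Blackboard` class, the key name, and the guard identifiers in this sketch are hypothetical.

```python
class Blackboard:
    """Shared key/value store that any agent can write to and read from."""
    def __init__(self):
        self._entries = {}

    def post(self, key: str, value, author: str) -> None:
        self._entries[key] = (value, author)

    def read(self, key: str, default=None):
        value, _author = self._entries.get(key, (default, None))
        return value


squad_board = Blackboard()
# One guard records what it saw...
squad_board.post("last_known_player_pos", (12, 7), author="guard_1")
# ...and another uses it without having seen the player itself.
target = squad_board.read("last_known_player_pos")
```

Recording the author alongside each value makes it possible to tell later who contributed which piece of information.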

Influence maps

Game AI often needs to decide where to move. Such questions can usually be treated as "geographical" problems, which require an understanding of the shape of the environment and the location of the enemy. We need a way to take the terrain into account and get an overall picture of the environment. An influence map is a data structure designed to solve this problem.

Conclusion

This article has given a general overview of the AI techniques used in games and the situations in which they are useful. Some of these techniques may not be common, but they have great potential. Because of the length of this article, we did not cover each method in detail. Interested readers can refer to the original text.
